Filter out bots from the statistics of your website in Google Analytics

Google has finally introduced a long-awaited feature in Analytics, the world’s most popular package for tracking website traffic: the ability to automatically exclude traffic generated by bots.

Photo by: Sergey Ivanov

Bots and spiders

Some sources indicate that over 60% of web traffic is generated by robots – software created to visit websites automatically.

There are many types of “bots”. Some of them are “spiders” (or “crawlers”), scanning web content and following links. The most famous one is GoogleBot (and its equivalents used by other search engines) – cataloging the Internet and constantly updating the data in its gigantic database. Spiders are also used by various tools for monitoring content, brand mentions, etc.

Unfortunately, not all spiders are good. The so-called “spambots” (spammers’ robots) search websites for email addresses to add to spammers’ mailing lists. That’s the main reason why you shouldn’t publish your email address anywhere in plain (unencoded) form. Not to mention your credit card number…

More advanced bots not only read page contents but also actively perform certain actions, such as logging in. This type of tool is most commonly used by hackers, allowing them to automate the search for sites with weaknesses that are easy to exploit.

Filtering

With automated traffic on such a large scale, website statistics are naturally skewed. Until now, without advanced knowledge (and a substantial list of bots), it was not possible to isolate and ignore the traffic generated by bots.

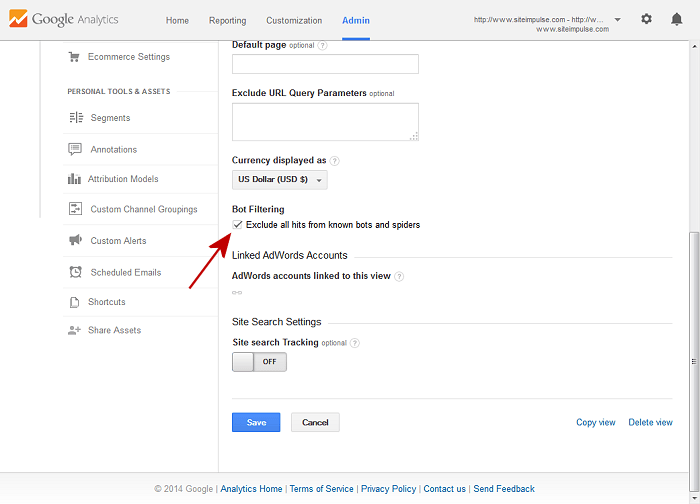

After years of waiting, a new option has appeared in the Google Analytics panel: “Exclude all hits from known bots and spiders”. Note the word “known”: automatic and unambiguous identification of a robot is very difficult, so the functionality implemented by Google excludes only bots that are present on a list. Google uses the list created by the IAB/ABC.

Unfortunately, without a deep pocket ($4,000 to $14,000) there is no way to see the list and check what bots are on it.
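To make the idea of list-based filtering concrete, here is a minimal sketch in Python. It assumes hits are matched against the list by their user-agent string, which is only an assumption; the article's sources don't say how Google does the matching. Since the IAB/ABC list is not public, the patterns in `KNOWN_BOT_PATTERNS` are hypothetical placeholders, and the function names and the hit structure are made up for illustration.

```python
import re

# Hypothetical placeholder patterns; the real IAB/ABC list is not public.
KNOWN_BOT_PATTERNS = ["googlebot", "bingbot", "spider", "crawler"]

# One case-insensitive regex that matches any of the listed patterns.
_BOT_RE = re.compile(
    "|".join(re.escape(p) for p in KNOWN_BOT_PATTERNS), re.IGNORECASE
)


def is_known_bot(user_agent):
    """Return True if the user-agent string matches a pattern on the list."""
    return bool(_BOT_RE.search(user_agent or ""))


def exclude_known_bots(hits):
    """Keep only hits whose user agent is NOT on the known-bot list."""
    return [h for h in hits if not is_known_bot(h.get("user_agent", ""))]


hits = [
    {"user_agent": "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"},
    {"user_agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"},
    {"user_agent": "UnlistedScraper/1.0"},  # a bot, but not on the list
]
filtered = exclude_known_bots(hits)
```

In this sketch the Googlebot hit is dropped, while the made-up `UnlistedScraper/1.0` passes through. That is exactly the limitation hidden in the word “known”: a bot that isn't on the list still ends up in your statistics.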

How to turn it on

To start filtering out bots:

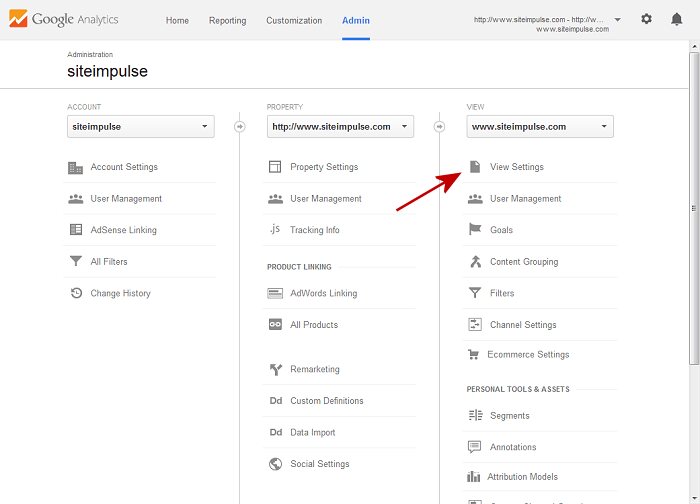

- log into Google Analytics

- select “Admin” from the top menu

- select the account, the property and the view

- in the selected view, click “View Settings”

- scroll down to the section “Bot Filtering”

- select the appropriate checkbox and save the configuration.

As I said above, robots generate a significant portion of web traffic. Therefore, don’t be surprised if the reported traffic on your site drops suddenly after you turn on filtering, by as much as one-third.

The chart will look worse than before, but at least you’ll see information about the behavior of (mostly) real users.